CINEMA.DATABASE: AWS IMPLEMENTATION

Overview

The cloud deployment of Cinema.Database rearchitects the app’s underlying structure to follow AWS best practices and adds new functionality that interacts directly with an S3 bucket.

Context: Culmination of project work in CareerFoundry’s Cloud Computing for Developers course

Purpose: Develop and deploy an app fully on the AWS cloud platform to gain proficiency in developing in the AWS cloud

Objective: Ensure app architecture is secure, reliable, and efficient, following AWS best practices

AWS Services Used: EC2, VPC, SG, ASG, ALB, AMI, IAM, S3, SDK, Lambda

Method

Migration to the Cloud

The first steps in this project involved migrating the already existing app to the cloud. The app in its entirety is made up of three basic parts: a client site, a backend API, and a database. All of these components would need to be moved to AWS in a configuration that maximized the benefits of using the cloud.

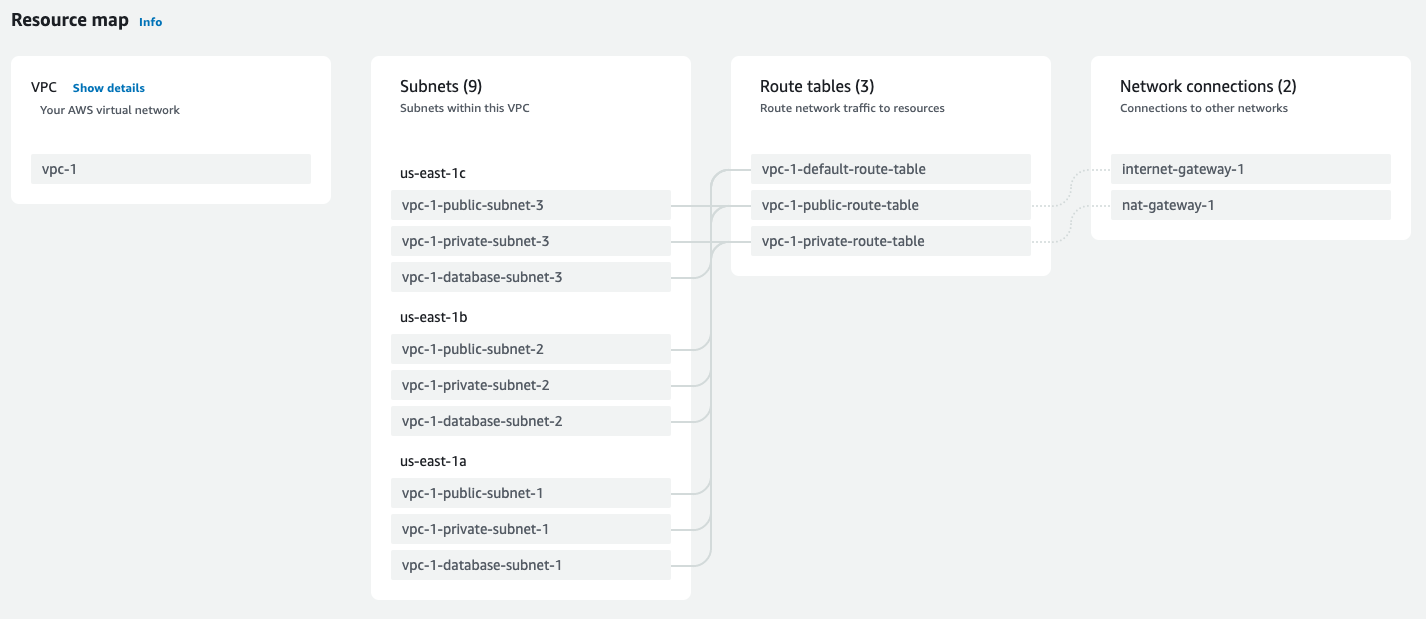

Setup

Before actually deploying anything, I set up a custom VPC where my EC2 instances would run. This allowed me to control ingress and egress to each component of my app by dividing my network into subnets with various levels of access. The VPC was divided into subnets with public access (open ingress and egress), private access (open egress but ingress only from within the VPC), and even more private access for the database (ingress and egress only within the VPC).

VPC configuration: 3 public, 3 private, and 3 database subnets. Public subnets route to internet gateway and private subnets route to NAT gateway

Each of these tiers contained three subnets (three public, three private, three database), each deployed in a different Availability Zone within the US East 1 region, where my user base and I were located. Using multiple AZs would let me implement a more reliable architecture, which is discussed later in this case study.
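To make the layout concrete, here is a minimal sketch of how nine subnets could be carved out of one VPC range, one per tier and Availability Zone. The CIDR block, the /24 subnet size, and the AZ names are assumptions for illustration; the actual values used in this project are not recorded here.

```python
import ipaddress

# Assumed values for illustration only; the real VPC range is not stated.
VPC_CIDR = "10.0.0.0/16"
AZS = ["us-east-1a", "us-east-1b", "us-east-1c"]
TIERS = ["public", "private", "database"]


def plan_subnets(vpc_cidr, tiers, azs, new_prefix=24):
    """Assign one subnet per (tier, AZ) pair, carved from the VPC range."""
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = []
    for tier in tiers:
        for az in azs:
            plan.append({"tier": tier, "az": az, "cidr": str(next(blocks))})
    return plan


subnet_plan = plan_subnets(VPC_CIDR, TIERS, AZS)
for subnet in subnet_plan:
    print(f"{subnet['tier']:<10}{subnet['az']:<12}{subnet['cidr']}")
```

Spreading each tier across three AZs is what later lets the web servers survive the loss of a single zone.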

Database Deploy

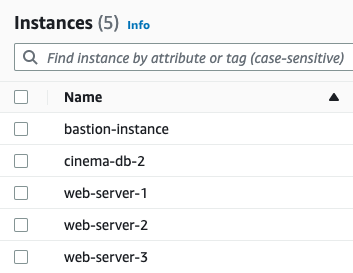

List of all instances running in this VPC for this app. Web servers on private subnets, database instances on database subnet, and bastion instance on public subnet

Starting from the bottom layer, I decided to simply copy my database onto an EC2 instance where it would run as a local MongoDB server. I learned about and considered the pros and cons of other deploy methods, in particular converting the database to the AWS-managed NoSQL service DynamoDB, but I ultimately didn’t use DynamoDB in order to avoid some of the required refactoring and extra time needed to deploy with this method. Since this is a relatively simple app with a simple database, it seemed the best choice to maintain that simplicity.

The EC2 instance housing my database was deployed to a database subnet of my VPC, where the attached security group and lack of gateway prevented any access to the public internet, but allowed certain traffic within the VPC. Following the principle of least privilege, the security group only allowed traffic to and from the EC2 instances housing the API (once they were created and their IPs known) and SSH access from a bastion instance deployed on a public subnet.
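The least-privilege rule set described above can be modeled as a small allow-list. This is only a sketch of the idea, not the actual security group: the IP addresses are hypothetical, and the ports assume MongoDB's default (27017) and standard SSH (22).

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    port: int
    source_cidr: str


# Hypothetical addresses: three web servers on private subnets, one bastion.
DATABASE_SG_RULES = [
    IngressRule(27017, "10.0.3.10/32"),  # web server, AZ a
    IngressRule(27017, "10.0.4.10/32"),  # web server, AZ b
    IngressRule(27017, "10.0.5.10/32"),  # web server, AZ c
    IngressRule(22, "10.0.0.5/32"),      # bastion instance, SSH only
]


def is_allowed(rules, source_ip, port):
    """Security groups are allow-lists: traffic passes only if a rule matches."""
    ip = ipaddress.ip_address(source_ip)
    return any(
        rule.port == port and ip in ipaddress.ip_network(rule.source_cidr)
        for rule in rules
    )


print(is_allowed(DATABASE_SG_RULES, "10.0.3.10", 27017))    # web server to MongoDB
print(is_allowed(DATABASE_SG_RULES, "10.0.0.5", 27017))     # bastion to MongoDB
print(is_allowed(DATABASE_SG_RULES, "203.0.113.7", 27017))  # outside address
```

Anything not explicitly listed is denied, which is why the bastion can open an SSH session but cannot query the database port.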

Server Deploy

Which brings us to the API (web server) instances. The API was initially deployed to just one instance where it could be configured and copied into an AMI. The creation of this instance and the deployment and configuration of the API were all done through the AWS CLI to better familiarize myself with other methods of interacting with the AWS API beyond the management console.

The API was copied onto the instance, and a few necessary changes were made to the code, such as updating the CORS policy and adding an environment variable that referenced the MongoDB instance I had just made. Finally, the API was configured to run as a server (on port 8081, which you may see in some screenshots).

Refinement

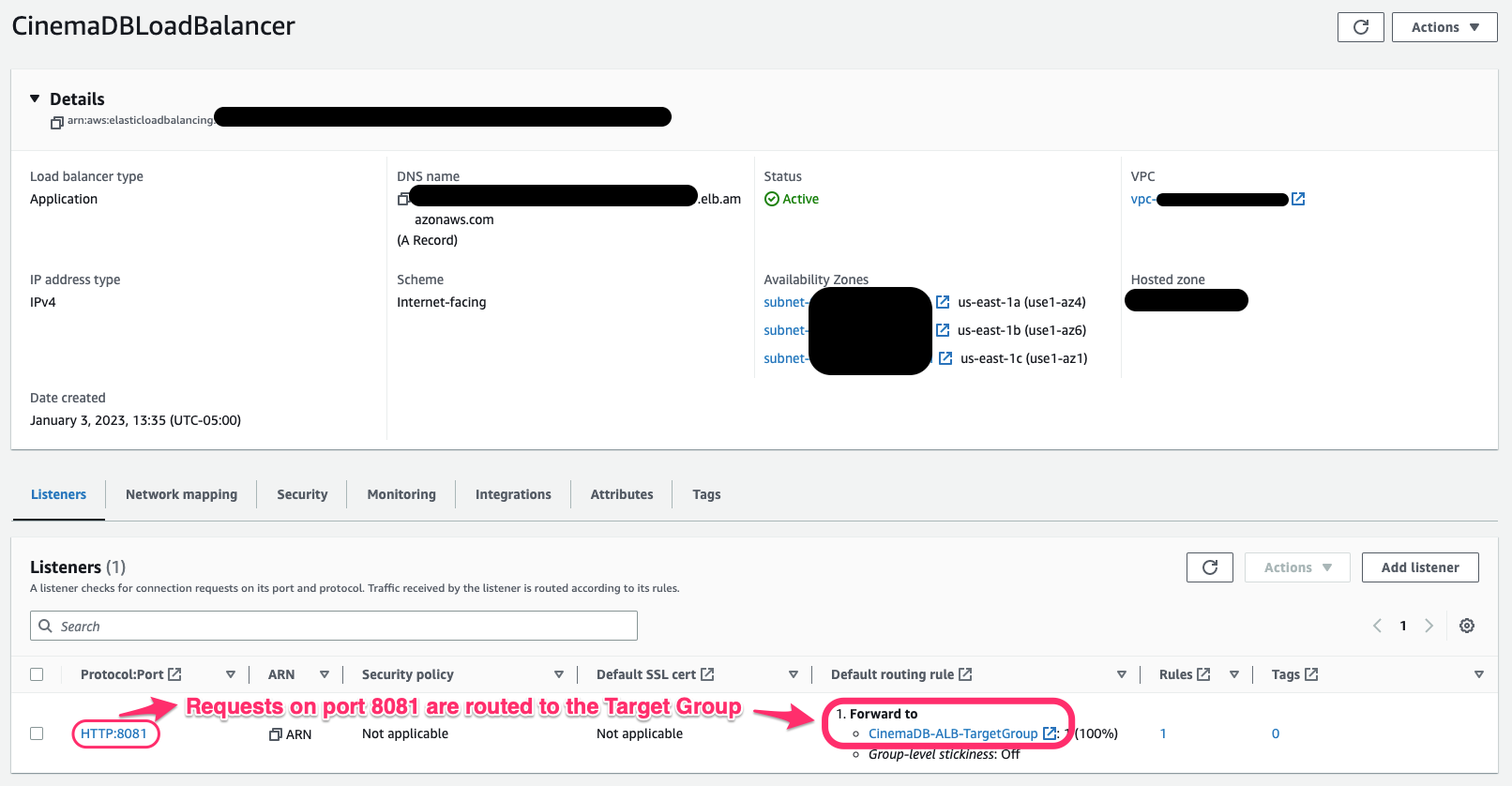

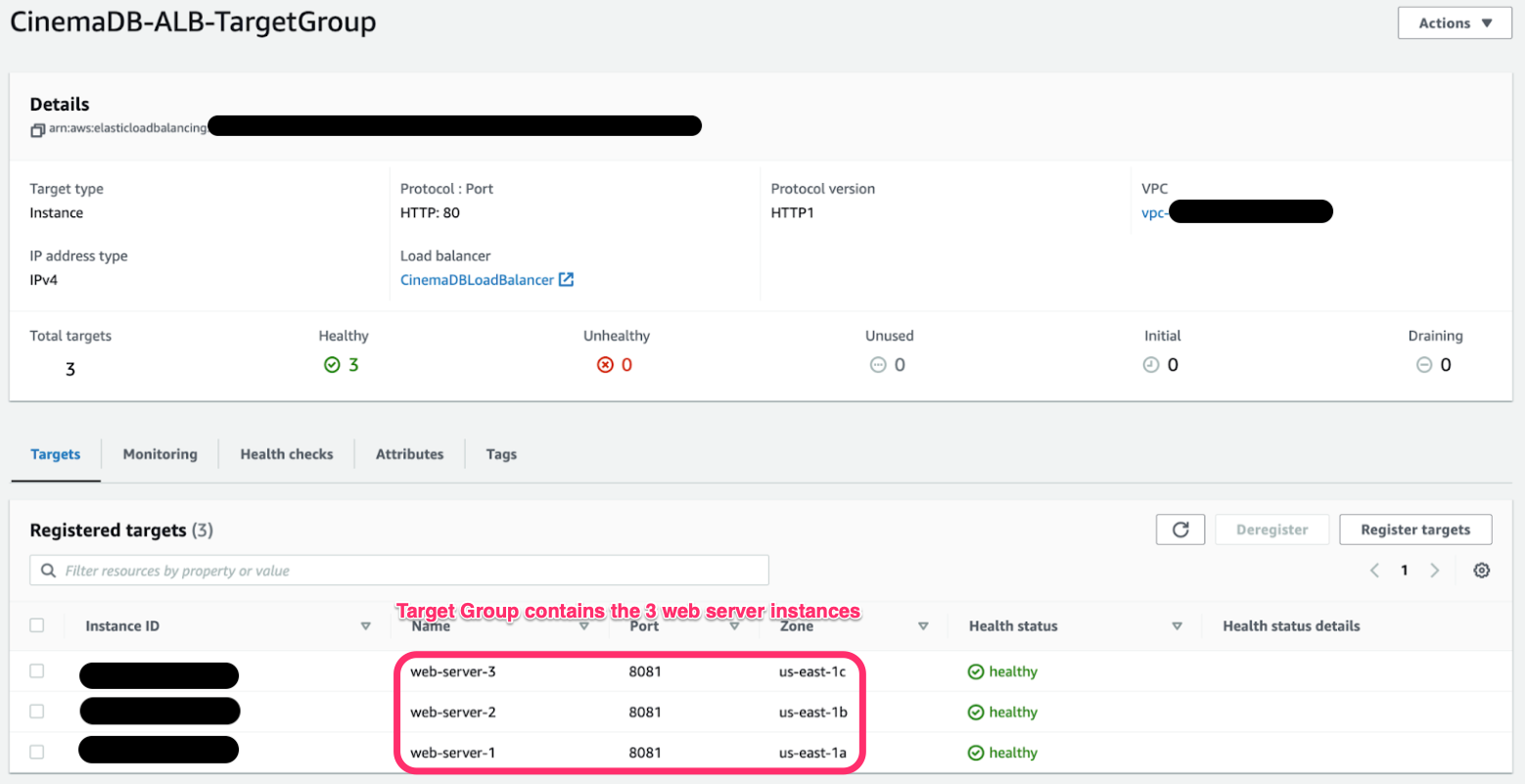

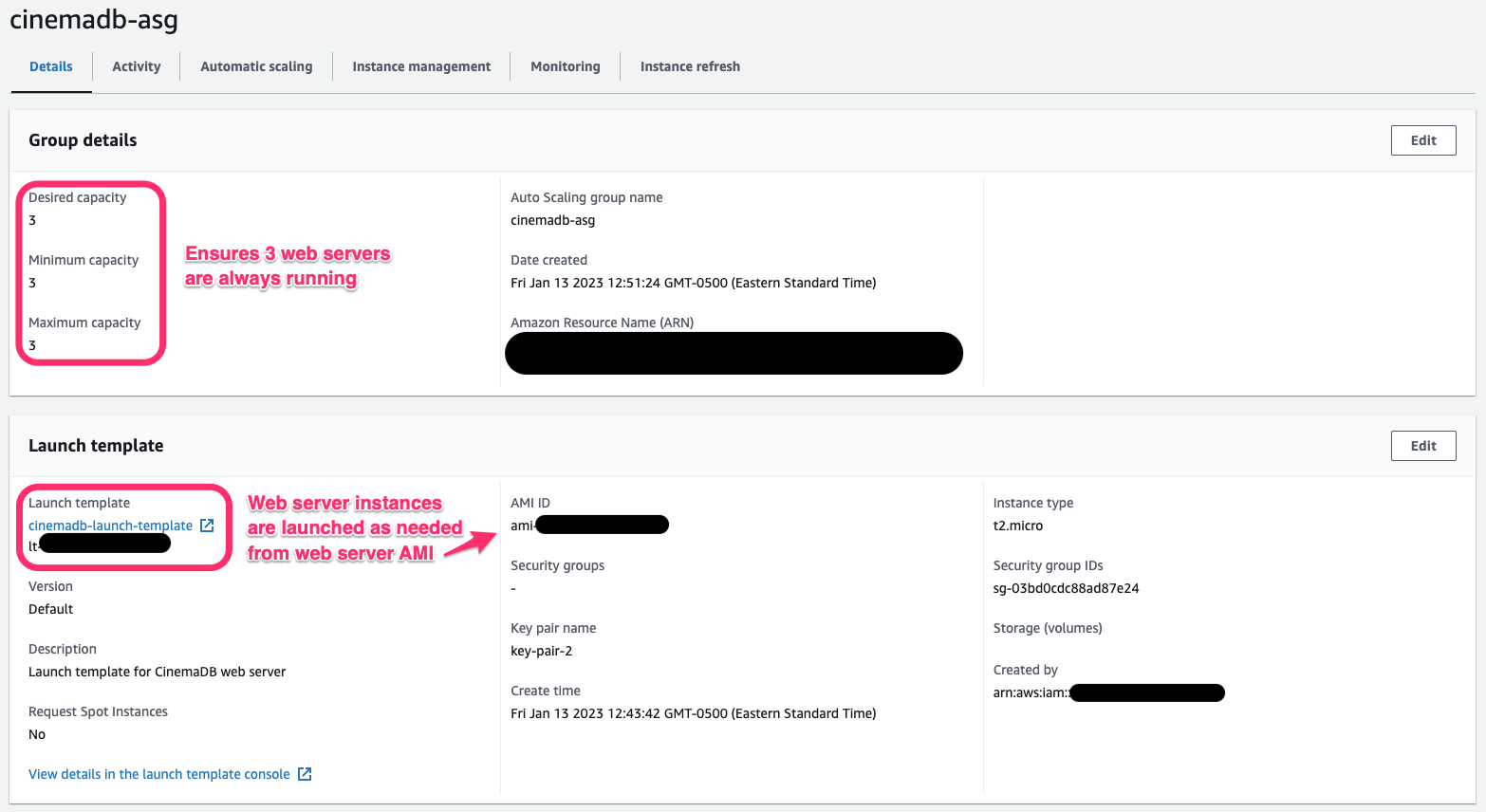

While the backend of the app would have functioned fine in this configuration, I wanted to simulate a real-world deployment as much as possible, which would require additional measures of security and resiliency to failure. So, I followed the architecture suggested by AWS as a best practice, and deployed the web server to an ASG, targeted by an ALB. The 3 redundant web servers in the ASG were deployed in private subnets, so they could receive traffic from the ALB and send/receive traffic to the database, but that was it (besides SSH access from the bastion instance). The ALB could now distribute incoming traffic across the instances, also acting as the public-facing entry point for the web servers.
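As a rough illustration of what the load balancer contributes, the toy model below rotates requests across targets and skips any marked unhealthy. It is a simplification under stated assumptions: the instance names are hypothetical, and a real ALB runs its own health checks and offers routing options beyond plain round robin.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy model: rotate through targets, skipping unhealthy ones."""

    def __init__(self, targets):
        self._health = {target: True for target in targets}
        self._order = cycle(targets)
        self._count = len(targets)

    def mark(self, target, healthy):
        self._health[target] = healthy

    def next_target(self):
        # Try each target at most once per call before giving up.
        for _ in range(self._count):
            candidate = next(self._order)
            if self._health[candidate]:
                return candidate
        raise RuntimeError("no healthy targets available")


# Hypothetical instance names, one per Availability Zone.
balancer = RoundRobinBalancer(["web-a", "web-b", "web-c"])
first_pass = [balancer.next_target() for _ in range(4)]
balancer.mark("web-b", False)  # simulate a failed instance
after_failure = [balancer.next_target() for _ in range(2)]
print(first_pass, after_failure)
```

In the real deployment the ASG would also replace the failed instance, so the pool returns to three servers without manual intervention.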

Client Site Deploy

The client-side code was comparatively easy to deploy. All API calls had to be updated to reference the ALB’s DNS name, then the client code was copied into an S3 bucket and configured as a static website (again using the AWS CLI).

Adding S3 Functionality

At this point, the cloud-deployed site worked! The components communicated with one another under tightly scoped access rules, following architecture principles intended to keep the app running reliably and efficiently. But there were a few more aspects of cloud computing with which I wanted to familiarize myself that were not covered in the base app, so I set about adding new functionality involving S3 buckets, Lambda, the AWS SDK, and (more) IAM.

Initial Updates

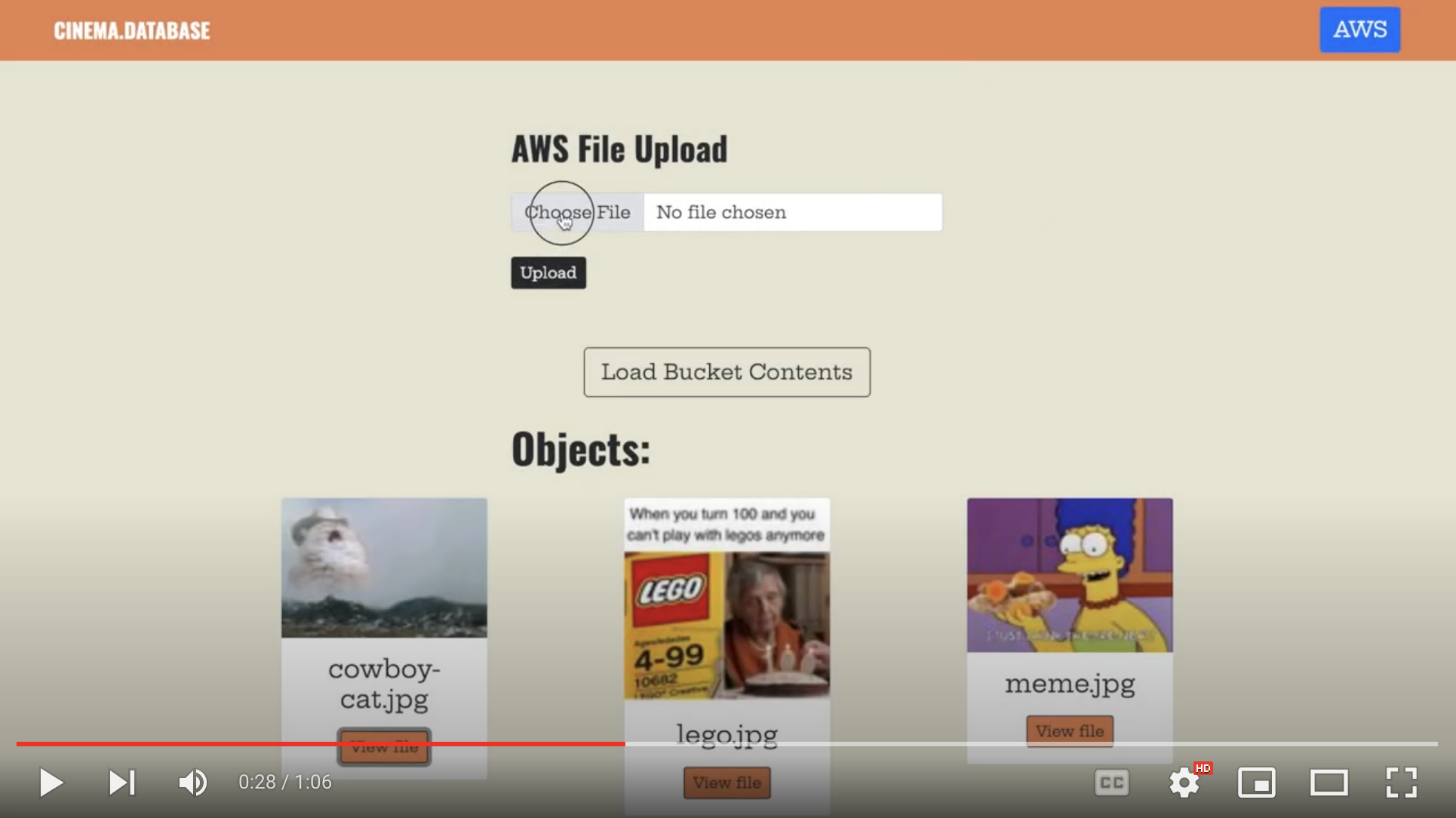

The first half of this undertaking required getting familiar with the AWS SDK so that users could interact with the contents of the S3 bucket right from the app. I started by adding new endpoints to the API that used the AWS SDK to upload an image, get a list of images, and get a specific image from the bucket. I then added some simple UI elements to the React frontend code (pictured below) and tied these to the newly created endpoints in the API.

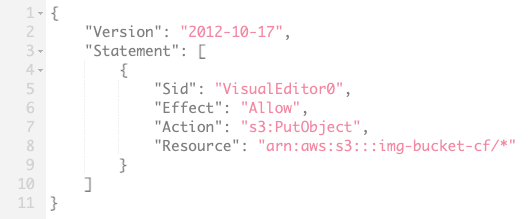

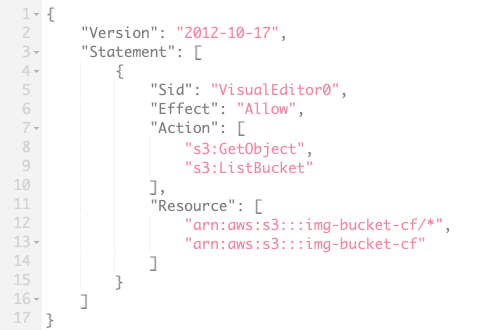

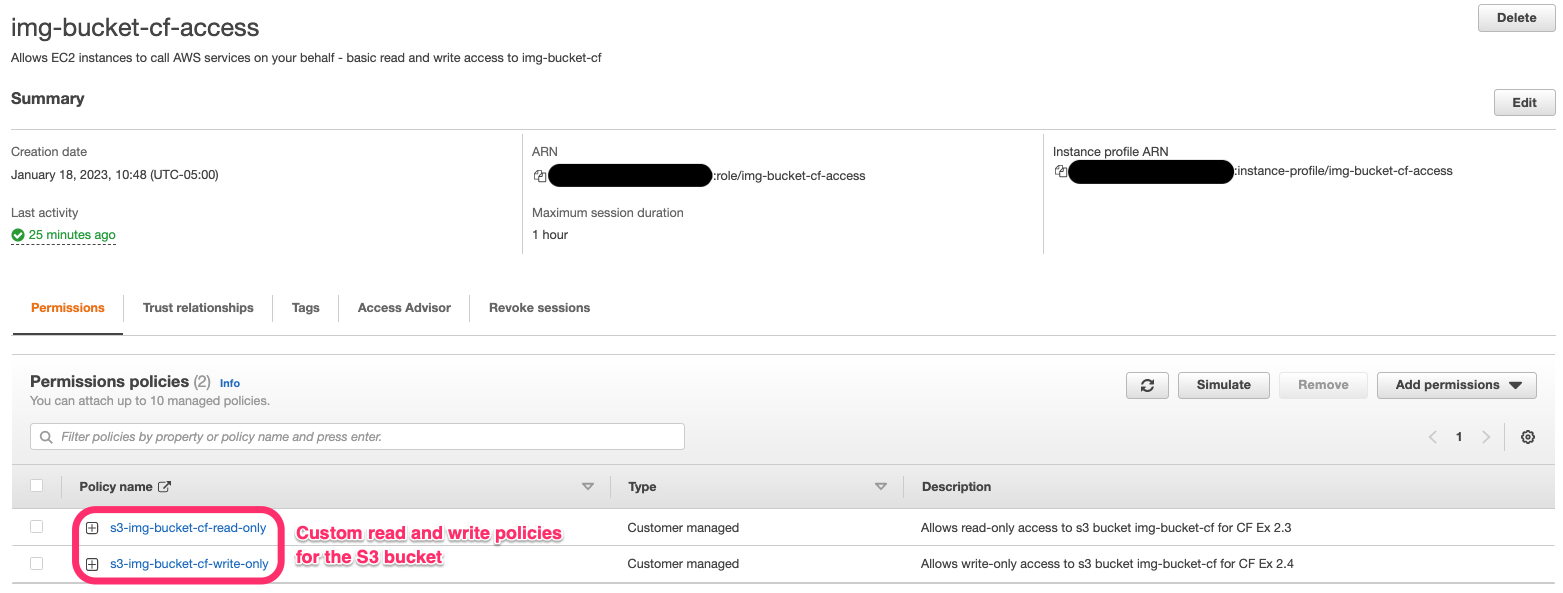

This also required setting up a new IAM role that allowed read/write access to the bucket for the EC2 web server. This was as simple as creating read-only and write-only policies for my image bucket, then attaching these to a role which could be assumed by an EC2 instance.
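For reference, policy documents of this kind might look like the sketch below, written as Python dictionaries that serialize to IAM's JSON format. The bucket name is hypothetical and the exact actions granted in the project are an assumption; the action names and the EC2 service principal themselves are standard IAM identifiers.

```python
import json

BUCKET = "example-image-bucket"  # hypothetical bucket name


def read_policy(bucket):
    """Read-only access: list the bucket and fetch objects from it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:ListBucket",
             "Resource": f"arn:aws:s3:::{bucket}"},
            {"Effect": "Allow", "Action": "s3:GetObject",
             "Resource": f"arn:aws:s3:::{bucket}/*"},
        ],
    }


def write_policy(bucket):
    """Write-only access: upload objects into the bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "s3:PutObject",
             "Resource": f"arn:aws:s3:::{bucket}/*"},
        ],
    }


# Trust policy that lets EC2 instances assume the role.
EC2_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow",
         "Principal": {"Service": "ec2.amazonaws.com"},
         "Action": "sts:AssumeRole"},
    ],
}

print(json.dumps(read_policy(BUCKET), indent=2))
```

Keeping read and write as separate policies means either one can be attached to another role later without granting more than that role needs.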

Addition of Lambda

Finally, to make use of a simple Lambda function, I set about adding thumbnail functionality to these features. This meant that when a user uploaded an image, the Lambda function would be triggered to create and upload a separate thumbnail of the image, and this thumbnail is what would be seen when the user fetched a list of the images. The original image could still be viewed by clicking on one of the thumbnails.

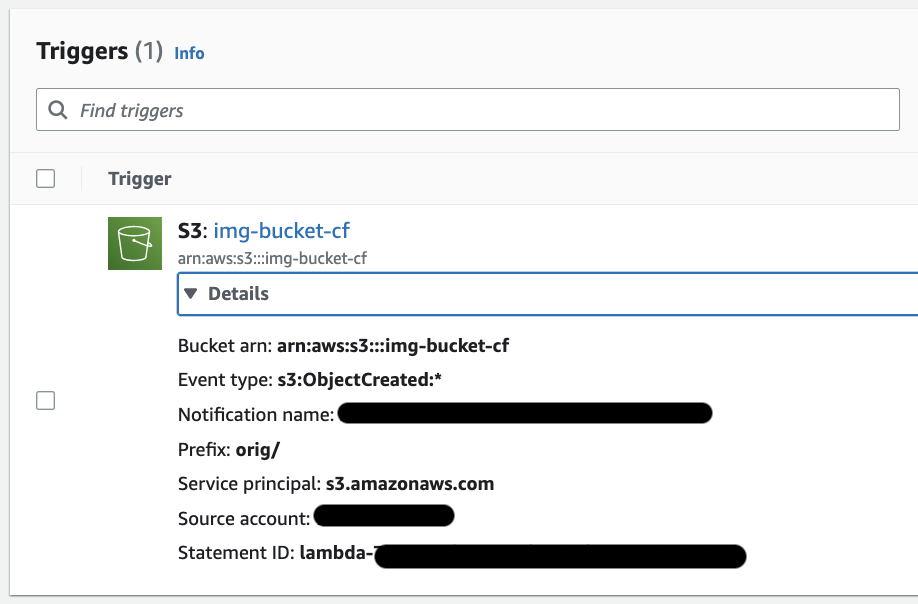

Trigger for the "create-thumbnail" Lambda function. Triggers when image uploaded with "orig/" prefix (i.e. uploaded from client site) and creates thumbnail of uploaded image
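A trigger like the one pictured can also be expressed as an S3 bucket notification configuration. The sketch below builds that structure as a Python dictionary; the account ID in the ARN is a placeholder, and the choice of the `s3:ObjectCreated:*` event type is an assumption, since the exact event selected in the console is not stated.

```python
def thumbnail_trigger_config(function_arn, prefix="orig/"):
    """Build an S3 notification configuration that invokes a Lambda
    function for newly created objects whose key starts with `prefix`."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],  # assumed event type
                "Filter": {
                    "Key": {
                        "FilterRules": [{"Name": "prefix", "Value": prefix}]
                    }
                },
            }
        ]
    }


# Placeholder account ID; "create-thumbnail" is the function name shown above.
config = thumbnail_trigger_config(
    "arn:aws:lambda:us-east-1:123456789012:function:create-thumbnail"
)
print(config)
```

The prefix filter is evaluated by S3 before Lambda is ever invoked, so uploads under any other prefix do not trigger the function at all.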

The Lambda handler function would create a thumbnail version of any new image added to the bucket, then upload this new thumbnail to the bucket with a different prefix using the SDK.

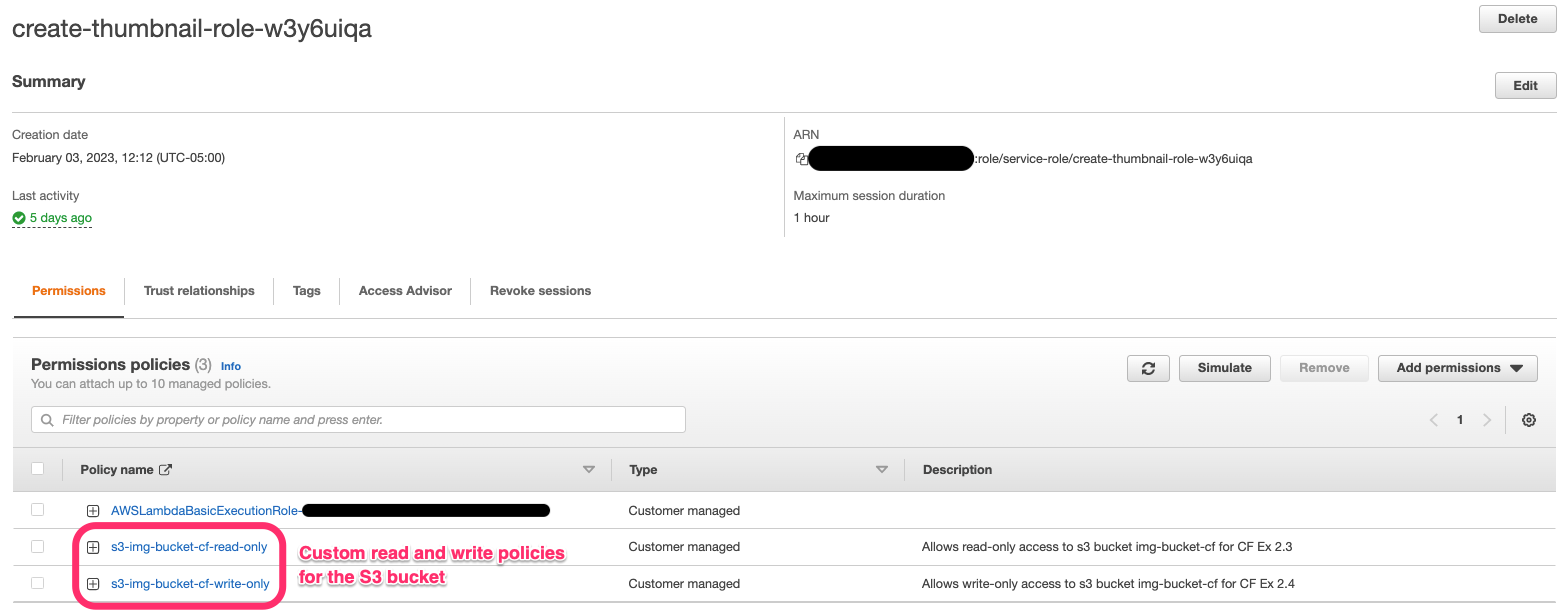

This also required creating another IAM role with read and write access to the bucket, this time to be assumed by a Lambda function.

IAM role for Lambda function allowing basic Lambda permission, plus read + write access to image bucket

IAM role for EC2 instances (web servers) allowing read + write access to image bucket

To avoid infinite recursion, the Lambda function would only trigger on new objects with the “orig/” prefix, which is what would be uploaded from the client site. For extra security, the handler function would also check for the thumbnail prefix and automatically return without uploading anything if it was detected.
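The second safeguard, the check inside the handler, can be sketched as follows. This is not the project's actual handler: the "thumb/" prefix is a hypothetical name for the thumbnail prefix, the `make_thumbnail` callback stands in for the real resize-and-upload step, and this version skips an offending record rather than returning from the whole invocation.

```python
from urllib.parse import unquote_plus

ORIGINAL_PREFIX = "orig/"    # prefix used for uploads from the client site
THUMBNAIL_PREFIX = "thumb/"  # hypothetical name for the thumbnail prefix


def thumbnail_key_for(key):
    """Return the destination key for a thumbnail, or None if the object
    must be ignored (already a thumbnail, or not an original upload)."""
    if key.startswith(THUMBNAIL_PREFIX) or not key.startswith(ORIGINAL_PREFIX):
        return None
    return THUMBNAIL_PREFIX + key[len(ORIGINAL_PREFIX):]


def handler(event, context=None, make_thumbnail=None):
    """Process S3 event records, skipping anything that could recurse."""
    created = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        target = thumbnail_key_for(key)
        if target is None:
            continue  # guard: never write a thumbnail of a thumbnail
        if make_thumbnail is not None:
            make_thumbnail(bucket, key, target)  # stand-in for resize + upload
        created.append(target)
    return created


def s3_event(key):
    return {"Records": [{"s3": {"bucket": {"name": "example-image-bucket"},
                                "object": {"key": key}}}]}


print(handler(s3_event("orig/my+photo.png")))
print(handler(s3_event("thumb/my+photo.png")))
```

Checking in both places means a misconfigured trigger alone cannot start a loop of the function invoking itself.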

And with that, the project was completed! Once I took screenshots and notes to properly document everything that was done, I shut down the site to save the cost of keeping all of these AWS services running, so the site is no longer live. If you want to see a sample of the app in action, check out this short YouTube demo:

Retrospective

Looking Back

My objective for this project was to learn how to use the fundamentals of AWS. As such, I was primarily focused on getting the AWS services up and running, and picking up these skills as I went. I became very familiar and comfortable with some of these services, such as EC2 setup, VPC configuration, and S3 interaction, but a plethora of others remained untouched in my project, including AWS-managed databases, dedicated security services, and billing and management services, to name a few.

If I were to start this project again, I would think of new ways to integrate even more AWS services, such as migrating my MongoDB database to AWS’s DynamoDB, setting up automated security services like GuardDuty and Shield (or Shield Advanced), and exploring the Marketplace for any helpful third-party software.

Moving Forward

In conjunction with CareerFoundry’s Cloud Computing for Developers course, I completed several official AWS training modules and dedicated some extra time to studying in preparation for the AWS Certified Cloud Practitioner exam. Shortly following my completion of this project and the course, I sat the exam and received my official AWS CCP certification.

It’s my hope that in earning this certification, as well as in completing and presenting this project, I have demonstrated my foundational knowledge of AWS services and how they can best be utilized to harness the power of the cloud.

Credits

Developed by

Design by

Project idea by